10 Continuous Distributions

10.1 Probability Density Functions

Although there are many more discrete distribution families, we will now consider some continuous distribution families. Most of what we have learned about discrete distributions applies to continuous distributions. However, the probability mass function needs a new name. In a discrete distribution, we can calculate an actual probability for a particular value in the sample space. In continuous distributions, doing so does not work. We can always calculate the probability that a score will occur in a particular interval. However, in continuous distributions, the intervals can become very small, approaching a width of 0. When that happens, the probability associated with that interval also approaches 0. Yet some parts of the distribution are more probable than others. Therefore, we need a measure that tells us how likely a value is relative to other values: the probability density function.

Considering the entire sample space of a discrete distribution, all of the associated probabilities from the probability mass function sum to 1. In a probability density function, it is the area under the curve that must equal 1. That is, there is a 100% probability that a value generated by the random variable will be somewhere under the curve. There is nowhere else for it to go!
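The shrinking-interval argument can be made concrete with a small R sketch. The standard normal distribution is an assumed stand-in here; the argument itself is general. As the interval around 0 narrows, its probability shrinks toward 0, but the probability divided by the interval's width settles on the density at 0, `dnorm(0)`. The last step checks that the total area under the curve is 1.

```r
# Standard normal distribution as an illustrative continuous distribution
widths <- c(1, 0.1, 0.01, 0.001)

# Probability that X falls in an interval of each width centered at 0
interval_prob <- pnorm(widths / 2) - pnorm(-widths / 2)

# Probability per unit of width approaches the density at 0
prob_per_width <- interval_prob / widths

data.frame(widths, interval_prob, prob_per_width)
dnorm(0)

# Total area under the density curve
total_area <- integrate(dnorm, lower = -Inf, upper = Inf)$value
total_area
```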

However, unlike probability mass functions, probability density functions do not generate probabilities. Remember, the probability of any single value in the sample space of a continuous variable is 0. We can only compare the densities of different values to each other. To see this, compare the discrete uniform distribution and continuous uniform distribution in Figure 9.2. Both distributions range from 1 to 4. In the discrete distribution, there are 4 points, each with a probability of ¼. It is easy to see that these 4 probabilities of ¼ sum to 1. Because of the scale of the figure, it is not easy to see exactly how high the probability density function is in the continuous distribution. It happens to be ⅓. Why? First, it does not mean that each value has a ⅓ probability. There are an infinite number of points between 1 and 4, and it would be absurd if each of them had a ⅓ probability. The distance between 1 and 4 is 3. In order for the rectangle to have an area of 1, its height must be ⅓. What does that ⅓ mean, then? In the case of a single value in the sample space, it does not mean much at all. It is simply a value that we can compare to other values in the sample space. It could be scaled to any value, but for the sake of convenience it is scaled such that the area under the curve is 1.
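The numbers in the paragraph above can be checked with R's uniform-distribution functions, `dunif` (density) and `punif` (cumulative probability). This is a sketch; the interval from 2 to 3 is an arbitrary choice for illustration.

```r
# Continuous uniform distribution from 1 to 4
lower <- 1
upper <- 4

# The density is the same everywhere between the bounds: 1 / (4 - 1) = 1/3
density_height <- dunif(c(1.5, 2.5, 3.5), min = lower, max = upper)
density_height

# An interval of width 1 contains 1/3 of the probability
p_2_to_3 <- punif(3, min = lower, max = upper) - punif(2, min = lower, max = upper)
p_2_to_3

# The 3-wide, 1/3-tall rectangle has an area of 1
area <- integrate(function(x) dunif(x, min = lower, max = upper),
                  lower = lower, upper = upper)$value
area

# Discrete counterpart: 4 points with probability 1/4 each sum to 1
sum(rep(1 / 4, 4))
```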

Note that some probability density functions can produce values greater than 1. If a continuous distribution's range is narrower than 1, at least some portions of the curve must be greater than 1 to make the area under the curve equal 1. For example, if the bounds of a continuous distribution are 0 and ⅓, the average height of the probability density function must be 3 so that the total area is equal to 1. For a continuous uniform distribution with those bounds, the height is exactly 3 everywhere between 0 and ⅓.
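A quick R check of the 0-to-⅓ example, assuming a continuous uniform distribution with those bounds: every density value is 3, yet the total area is still 1.

```r
# Continuous uniform distribution from 0 to 1/3
height <- dunif(c(0.05, 0.15, 0.30), min = 0, max = 1 / 3)
height  # every value equals 3: a density greater than 1 is allowed

# The total area is still 1 (width 1/3 times height 3)
area <- integrate(function(x) dunif(x, min = 0, max = 1 / 3),
                  lower = 0, upper = 1 / 3)$value
area
```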

10.2 Continuous Uniform Distributions

Unlike the discrete uniform distribution, the uniform distribution is continuous.1 In both distributions, there are upper and lower bounds, and all values between them are equally likely.

1 For the sake of clarity, the uniform distribution is often referred to as the continuous uniform distribution.

10.2.1 Generating random samples from the continuous uniform distribution

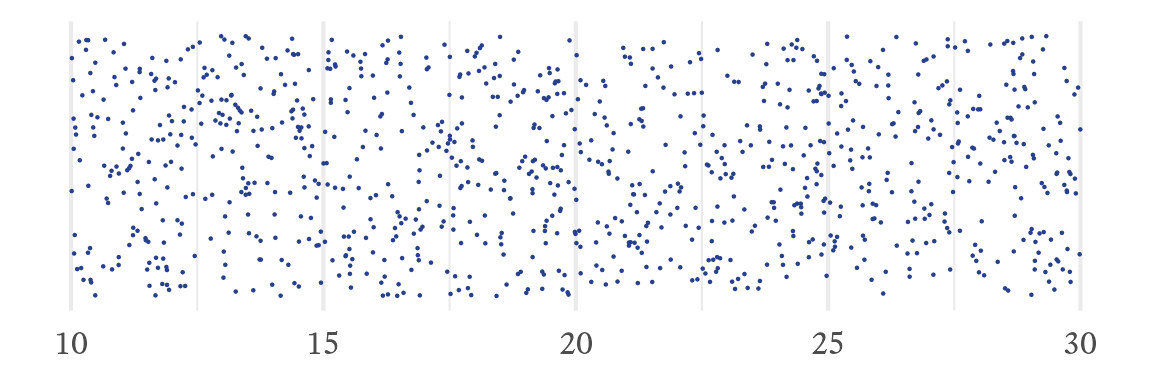

To generate a sample of numbers with a continuous uniform distribution between $a$ and $b$, use the runif function like so:

```r
# Sample size
n <- 1000

# Lower and upper bounds
a <- 10
b <- 30

# Sample
x <- runif(n, min = a, max = b)
```

10.2.1.1 Using the continuous uniform distribution to generate random samples from other distributions

Uniform distributions can begin and end at any real number, but one member of the uniform distribution family is particularly important: the uniform distribution between 0 and 1. If you need to use Excel instead of a statistical package, you can use this distribution to generate random numbers from many other distributions.

Applying a continuous distribution's cumulative distribution function to its own random variates produces a continuous uniform distribution between 0 and 1. In the other direction, a distribution's quantile function converts a continuous uniform distribution between 0 and 1 into that distribution. For discrete distributions, the quantile direction still works, but the cumulative distribution function direction does not produce an exactly uniform distribution. This process is particularly useful for generating random numbers with an unusual distribution. If the distribution's quantile function is known, a sample with a continuous uniform distribution can easily be generated and converted.
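Both directions can be sketched in R with `runif`, a quantile function, and a cumulative distribution function. The exponential distribution (`qexp`, rate 2) is an arbitrary stand-in for an "unusual" distribution, and the seed is arbitrary; any quantile function would do.

```r
set.seed(1)
n <- 10000

# Quantile function: uniform -> target distribution (exponential with rate 2)
u <- runif(n)
x_exp <- qexp(u, rate = 2)

# Compare with R's built-in generator: both means should be near 1 / rate = 0.5
mean(x_exp)
mean(rexp(n, rate = 2))

# Cumulative distribution function: normal -> uniform
z <- rnorm(n)
u_back <- pnorm(z)

# A uniform(0, 1) variable has mean 1/2 and variance 1/12
mean(u_back)
var(u_back)
```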

For example, the RAND function in Excel generates random numbers between 0 and 1 with a continuous uniform distribution. The BINOM.INV function is the binomial distribution's quantile function. Suppose that $n$ (the number of Bernoulli trials) is 5 and $p$ (the probability of success on each Bernoulli trial) is 0.6. A random number from the binomial distribution with $n = 5$ and $p = 0.6$ is generated like so:

```
=BINOM.INV(5,0.6,RAND())
```
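For comparison, here is a minimal R sketch of the same approach: `qbinom` plays the role of BINOM.INV and `runif` plays the role of RAND. Generating many values and tabulating them shows that the observed proportions line up with the binomial probabilities from `dbinom`. The seed and the number of draws are arbitrary.

```r
set.seed(2)
n_trials <- 5      # number of Bernoulli trials
p_success <- 0.6   # probability of success on each trial

# One random binomial value, analogous to =BINOM.INV(5,0.6,RAND())
qbinom(runif(1), size = n_trials, prob = p_success)

# Many values: observed proportions should approximate the binomial probabilities
draws <- qbinom(runif(100000), size = n_trials, prob = p_success)
observed <- as.numeric(table(factor(draws, levels = 0:n_trials))) / length(draws)
expected <- dbinom(0:n_trials, size = n_trials, prob = p_success)
round(cbind(k = 0:n_trials, observed, expected), 3)
```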

Excel has quantile functions for many distributions (e.g., BETA.INV, BINOM.INV, CHISQ.INV, F.INV, GAMMA.INV, LOGNORM.INV, NORM.INV, T.INV). This method of combining RAND and a quantile function works reasonably well in Excel for quick-and-dirty projects, but when high levels of accuracy are needed, random samples should be generated in a dedicated statistical program like R, Python (via the numpy package), Julia, Stata, SAS, or SPSS.

10.3 Normal Distributions

(Unfinished)


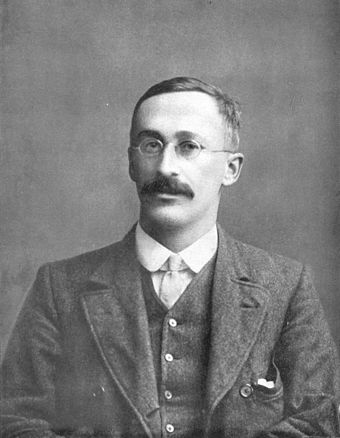

The normal distribution is sometimes called the Gaussian distribution after Carl Friedrich Gauss (Figure 10.2). It is a small injustice that most people do not use Gauss's name to refer to the normal distribution. Thankfully, Gauss is not exactly languishing in obscurity. He made so many discoveries that his name is all over mathematics and statistics.

The normal distribution is probably the most important distribution in statistics and in psychological assessment. In the absence of other information, assuming that an individual difference variable is normally distributed is a good bet. Not a sure bet, of course, but a good bet. Why? What is so special about the normal distribution?

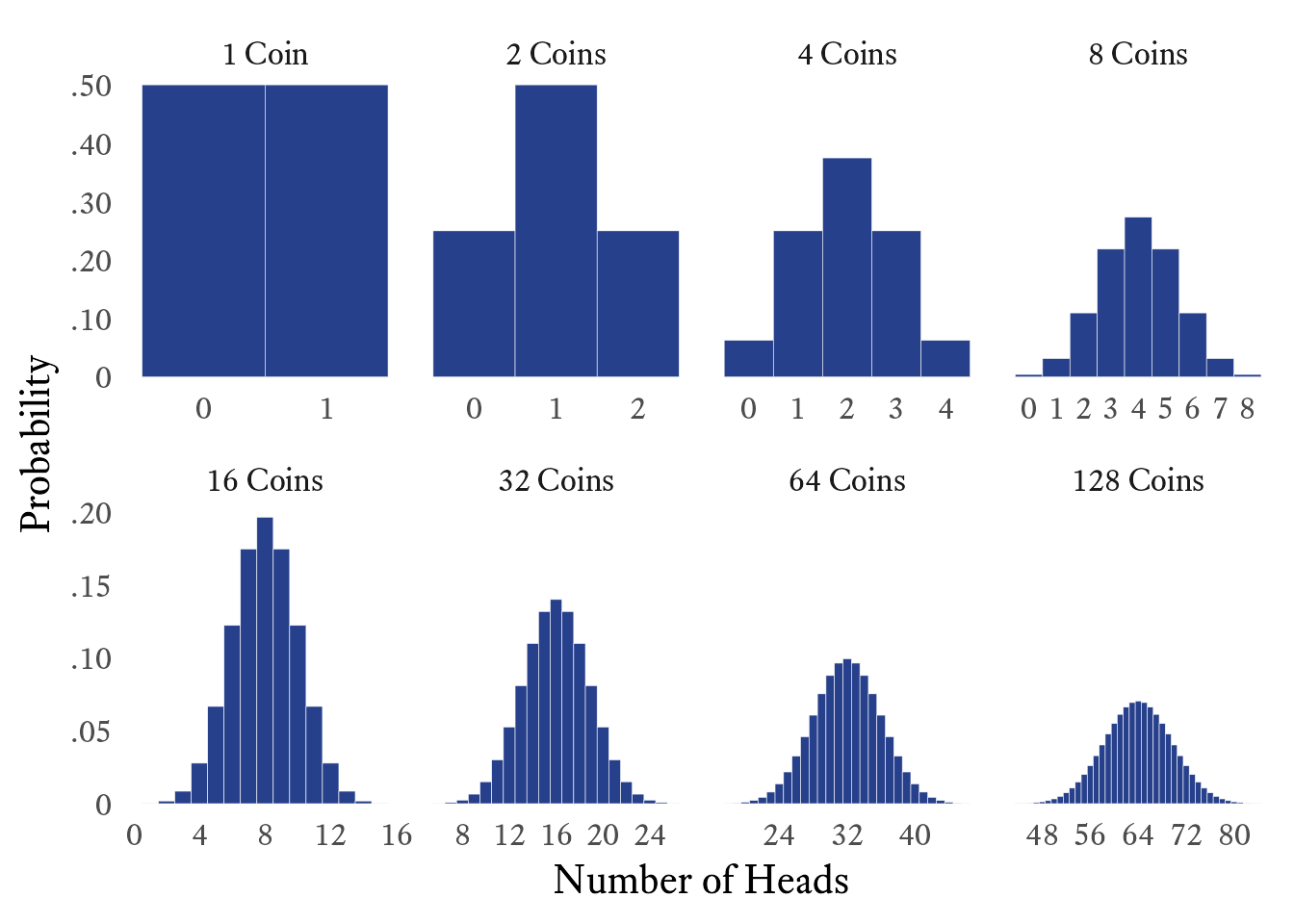

To get a sense of the answer to this question, consider what happens to the binomial distribution as the number of events ($n$) increases. To make the example more concrete, let's assume that we are tossing coins and counting the number of heads. In Figure 10.3, the first plot shows the probability mass function for the number of heads when there is a single coin ($n = 1$). In the second plot, $n = 2$ coins. That is, if we flip 2 coins, there will be 0, 1, or 2 heads. In each subsequent plot, we double the number of coins that we flip simultaneously. Even with as few as 4 coins, the distribution begins to resemble the normal distribution, although the resemblance is very rough. With 128 coins, however, the resemblance is very close.
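The visual impression in Figure 10.3 can be quantified. This R sketch (an illustration, not the code behind the figure) compares each fair-coin binomial probability mass function with the normal density that has the same mean ($np$) and variance ($np(1-p)$), and reports the largest absolute gap. Because each bar is 1 unit wide, comparing the mass at a count with the density at that count is a rough but reasonable comparison. From 2 coins onward, the gap shrinks each time the number of coins doubles.

```r
p_heads <- 0.5
coins <- 2^(0:7)  # 1, 2, 4, ..., 128 coins

max_gap <- sapply(coins, function(n) {
  heads <- 0:n
  binomial_pmf <- dbinom(heads, size = n, prob = p_heads)
  # Normal density with the binomial's mean (np) and standard deviation (sqrt(np(1 - p)))
  normal_pdf <- dnorm(heads, mean = n * p_heads,
                      sd = sqrt(n * p_heads * (1 - p_heads)))
  max(abs(binomial_pmf - normal_pdf))
})

data.frame(coins, max_gap = round(max_gap, 4))
```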

The resemblance to the normal distribution in this example does not depend on the fact that $p = 0.5$, which makes the binomial distribution symmetric. If $p$ is extreme (close to 0 or 1), the binomial distribution is asymmetric. However, if $n$ is large enough, the binomial distribution eventually becomes very close to normal.
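One way to see this is through skewness. The skewness of a binomial distribution is $(1 - 2p)/\sqrt{np(1 - p)}$, so even with a lopsided $p$ it shrinks toward 0 (the normal distribution's skewness) as $n$ grows. A minimal R sketch, with $p = 0.05$ chosen arbitrarily; `binomial_skewness` is a helper defined here, not a built-in function.

```r
# Skewness of a binomial distribution with n trials and success probability p
binomial_skewness <- function(n, p) (1 - 2 * p) / sqrt(n * p * (1 - p))

p <- 0.05
n <- c(10, 100, 1000, 10000)
skew <- binomial_skewness(n, p)
data.frame(n, skew = round(skew, 3))

# With p = 0.5 the binomial distribution is perfectly symmetric
binomial_skewness(10, 0.5)
```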

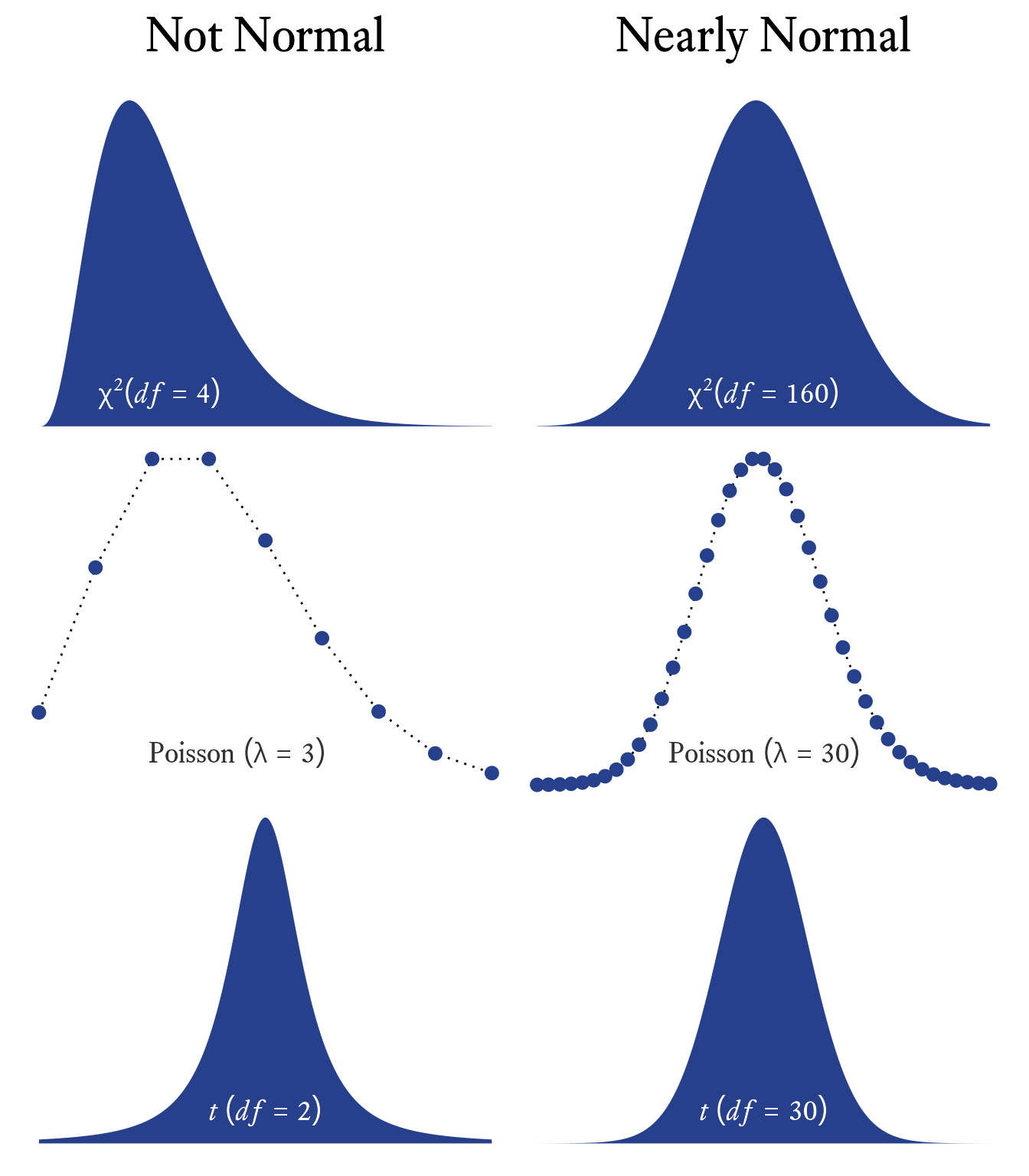

Many other distributions, such as the Poisson, Student's t, F, and $\chi^2$ distributions, have distinctive shapes under some conditions but approximate the normal distribution in others (see Figure 10.4). Why? When these non-normal distributions approximate the normal distribution, it is because, as in Figure 10.3, many independent events are summed.

10.3.1 Notation for Normal Variates

Statisticians write about variables with normal distributions so often that it was useful to develop a compact notation for specifying a normal variable's parameters. If I want to specify that $X$ is a normally distributed variable with a mean of $\mu$ and a variance of $\sigma^2$, I will use this notation:

$$X \sim \mathcal{N}(\mu, \sigma^2)$$

Many authors list the standard deviation $\sigma$ instead of the variance $\sigma^2$. When I specify normal distributions with specific means and variances, I will avoid ambiguity by always showing the variance as the standard deviation squared. For example, a normal variate with a mean of 10 and a standard deviation of 3 will be written as $X \sim \mathcal{N}(10, 3^2)$.
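R's normal-distribution functions follow the standard-deviation convention: the `sd` argument of `rnorm`, `dnorm`, `pnorm`, and `qnorm` takes $\sigma$, not $\sigma^2$. A short sketch for $X \sim \mathcal{N}(10, 3^2)$, with an arbitrary seed and sample size:

```r
set.seed(3)
mu <- 10
sigma <- 3   # standard deviation; the variance is sigma^2 = 9

# Pass the standard deviation, not the variance
x <- rnorm(100000, mean = mu, sd = sigma)
mean(x)  # close to 10
var(x)   # close to 9

# Density at the mean: 1 / (sigma * sqrt(2 * pi))
dnorm(mu, mean = mu, sd = sigma)
```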

10.3.2 Half-Normal Distribution

(Unfinished)

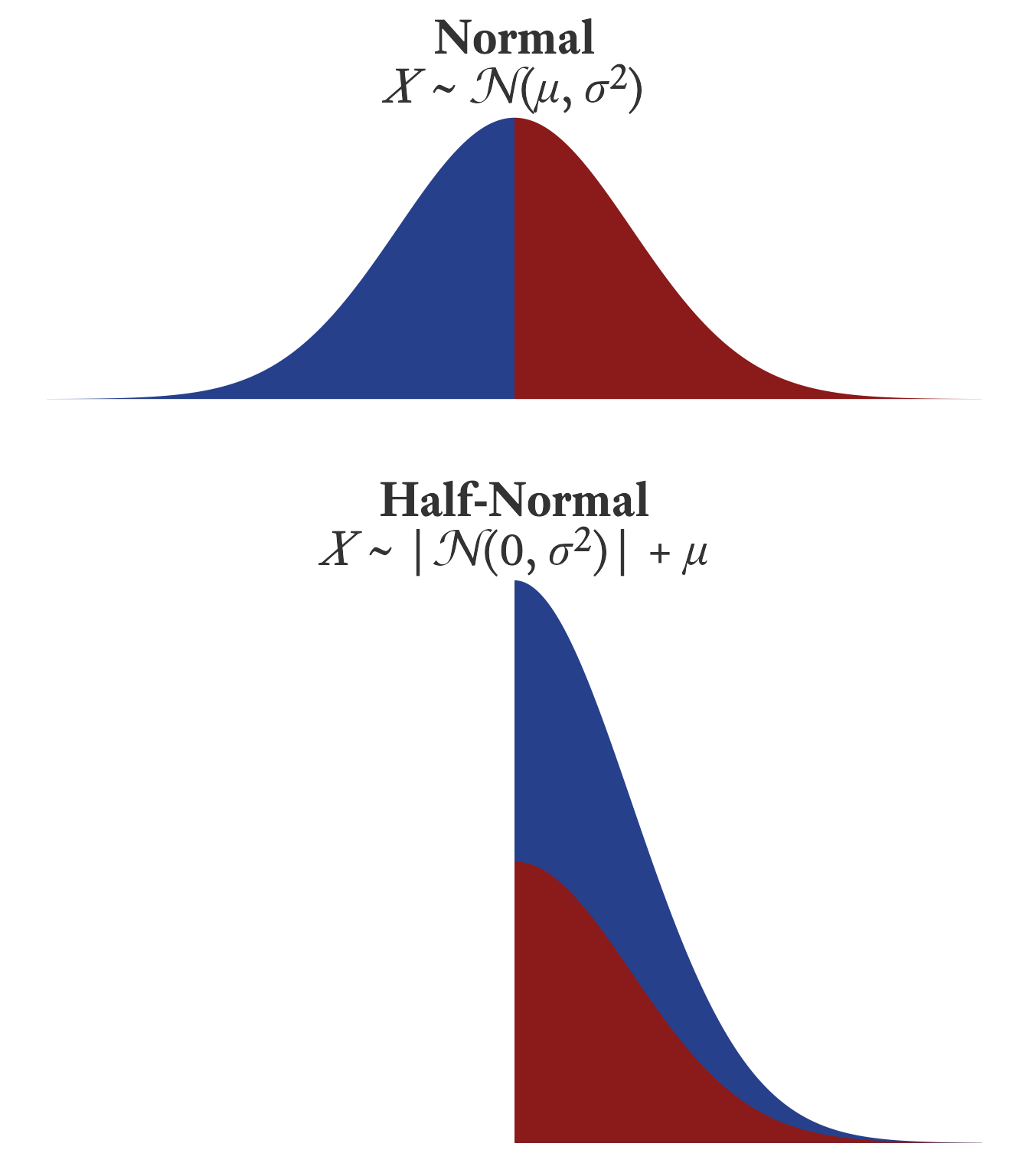

Suppose that $X$ is a normally distributed variable such that

$$X \sim \mathcal{N}(\mu, \sigma^2)$$

Variable $Y$ then has a half-normal distribution such that

$$Y = |X - \mu|$$

In other words, imagine that a normal distribution is folded at the mean with the left half of the distribution now stacked on top of the right half of the distribution (see Figure 10.8).
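Folding can be sketched in R, assuming $\mu = 0$ and $\sigma = 2$ for illustration. Stacking the left half onto the right half doubles the density for $y \ge 0$, so the half-normal density is twice the normal density there, and its mean is $\sigma\sqrt{2/\pi}$. `half_normal_density` is a helper defined here, not a built-in function.

```r
mu <- 0
sigma <- 2

# Half-normal density: the normal density folded at the mean (doubled for y >= 0)
half_normal_density <- function(y) ifelse(y >= 0, 2 * dnorm(y, mean = 0, sd = sigma), 0)

# The folded curve still encloses an area of 1
area <- integrate(half_normal_density, lower = 0, upper = Inf)$value

# Mean of the half-normal distribution: sigma * sqrt(2 / pi)
theoretical_mean <- sigma * sqrt(2 / pi)
numerical_mean <- integrate(function(y) y * half_normal_density(y),
                            lower = 0, upper = Inf)$value

# Simulation: fold normal variates at the mean
set.seed(4)
y <- abs(rnorm(100000, mean = mu, sd = sigma) - mu)
c(area = area, theoretical = theoretical_mean,
  numerical = numerical_mean, simulated = mean(y))
```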

10.3.3 Truncated Normal Distributions

(Unfinished)

10.3.4 Multivariate Normal Distributions

(Unfinished)

10.4 Chi Square Distributions

(Unfinished)

I have always thought that the $\chi^2$ distribution has an unusual name. The chi part is fine, but why square? Why not call it the $\chi$ distribution?2 As it turns out, the $\chi^2$ distribution is formed from squared quantities.

2 Actually, there is a $\chi$ distribution. It is simply the square root of the $\chi^2$ distribution. The standard half-normal distribution happens to be a $\chi$ distribution with 1 degree of freedom.

The $\chi^2$ distribution has a straightforward relationship with the normal distribution: it is the sum of multiple independent squared standard normal variates. That is, suppose $Z$ is a standard normal variate:

$$Z \sim \mathcal{N}(0, 1^2)$$

In this case, $Z^2$ has a $\chi^2$ distribution with 1 degree of freedom ($\nu = 1$):

$$Z^2 \sim \chi^2_1$$

If $Z_1$ and $Z_2$ are independent standard normal variates, the sum of their squares has a $\chi^2$ distribution with 2 degrees of freedom:

$$Z_1^2 + Z_2^2 \sim \chi^2_2$$

If $Z_1, Z_2, \ldots, Z_\nu$ is a series of $\nu$ independent standard normal variates, the sum of their squares has a $\chi^2$ distribution with $\nu$ degrees of freedom:

$$\sum_{i=1}^{\nu} Z_i^2 \sim \chi^2_\nu$$
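The sum-of-squares definition can be checked by simulation in R. This sketch assumes $\nu = 4$ and an arbitrary seed; it compares the simulated sums with the known $\chi^2$ mean ($\nu$), variance ($2\nu$), and 95th percentile from `qchisq`.

```r
set.seed(5)
nu <- 4            # degrees of freedom
n_sims <- 100000

# Each row holds nu independent standard normal variates; sum their squares
z <- matrix(rnorm(n_sims * nu), ncol = nu)
chi_sq <- rowSums(z^2)

# A chi-square variate with nu degrees of freedom has mean nu and variance 2 * nu
mean(chi_sq)
var(chi_sq)

# Simulated vs. theoretical 95th percentile
quantile(chi_sq, 0.95)
qchisq(0.95, df = nu)
```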

10.4.1 Clinical Uses of the $\chi^2$ distribution

The $\chi^2$ distribution has many applications, but the ones most likely to be used in psychological assessment are the $\chi^2$ Test of Goodness of Fit and the $\chi^2$ Test of Independence.

The $\chi^2$ Test of Goodness of Fit tells us whether observed frequencies of events differ from expected frequencies. Suppose we suspect that a child's temper tantrums are more likely to occur on weekdays than on weekends. The child's mother has kept a record of each tantrum for the past year and was able to count the frequency of tantrums. If tantrums were equally likely to occur on any day, 5 of every 7 tantrums should occur on weekdays, and 2 of every 7 tantrums should occur on weekends. The observed frequencies are compared with the expected frequencies below.

| Day | Observed | Expected |
|---|---|---|
| Weekday | 14 | 20 |
| Weekend | 14 | 8 |

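In the table above, if the observed frequencies $o_i$ are compared to their respective expected frequencies $e_i$, then:

$$\chi^2 = \sum_{i=1}^{k} \frac{(o_i - e_i)^2}{e_i} = \frac{(14 - 20)^2}{20} + \frac{(14 - 8)^2}{8} = 1.8 + 4.5 = 6.3$$

with $k - 1 = 1$ degree of freedom, where $k = 2$ is the number of categories.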

Using the $\chi^2$ cumulative distribution function with 1 degree of freedom, we find that the probability of observing frequencies at least this far from the expected frequencies is low (p ≈ .012) under the assumption that tantrums are equally likely each day.
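The arithmetic can be reproduced step by step in R before handing it to `chisq.test`. This sketch uses the tantrum frequencies from this example; `pchisq` with `lower.tail = FALSE` gives the area above the observed statistic.

```r
observed <- c(Weekday = 14, Weekend = 14)
expected <- sum(observed) * c(Weekday = 5, Weekend = 2) / 7   # 20 and 8

# Chi-square statistic: sum of (observed - expected)^2 / expected
chi_sq <- sum((observed - expected)^2 / expected)
chi_sq

# Degrees of freedom: number of categories minus 1
deg_free <- length(observed) - 1

# Probability of a statistic at least this large if tantrums are equally likely each day
p_value <- pchisq(chi_sq, df = deg_free, lower.tail = FALSE)
p_value
```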

```r
observed_frequencies <- c(Weekday = 14, Weekend = 14)
expected_probabilities <- c(Weekday = 5, Weekend = 2) / 7

fit <- chisq.test(x = observed_frequencies,
                  p = expected_probabilities)
fit
```

```
Chi-squared test for given probabilities

data:  observed_frequencies
X-squared = 6.3, df = 1, p-value = 0.01207
```

```r
# View expected frequencies and residuals
broom::augment(fit)
```

```
# A tibble: 2 × 6
  Var1    .observed .prop .expected .resid .std.resid
  <fct>       <dbl> <dbl>     <dbl>  <dbl>      <dbl>
1 Weekday        14   0.5        20  -1.34      -2.51
2 Weekend        14   0.5         8   2.12       2.51
```

```r
library(tibble)
library(dplyr)
library(tidyr)

# Simulate two related binary variables: B is more likely to be 1 when A is 1.
# No seed is set, so the counts will differ from run to run.
d_table <- tibble(A = rbinom(100, 1, 0.5)) |>
  mutate(B = rbinom(100, 1, (A + 0.5) / 3)) |>
  table()

# Display the contingency table with the levels of A as columns
d_table |>
  as_tibble() |>
  pivot_wider(names_from = A,
              values_from = n) |>
  knitr::kable(align = "lcc") |>
  kableExtra::kable_styling(bootstrap_options = "basic") |>
  kableExtra::collapse_rows() |>
  kableExtra::add_header_above(header = c(` ` = 1, A = 2))
```

| B | A = 0 | A = 1 |
|---|---|---|
| 0 | 47 | 21 |
| 1 | 11 | 21 |
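Under the Test of Independence, each cell's expected count is its row total times its column total divided by the grand total. This R sketch reproduces the expected counts and Pearson residuals for the counts shown in this example (47, 21, 11, and 21). These counts came from one run of the simulation above; a new run would give different counts.

```r
# Observed counts (rows: B = 0, 1; columns: A = 0, 1)
observed <- matrix(c(47, 11, 21, 21), nrow = 2,
                   dimnames = list(B = c("0", "1"), A = c("0", "1")))

# Expected count for each cell if A and B are independent:
# row total * column total / grand total
expected <- outer(rowSums(observed), colSums(observed)) / sum(observed)
round(expected, 1)

# Pearson residuals: (observed - expected) / sqrt(expected)
resid <- (observed - expected) / sqrt(expected)
round(resid, 2)
```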

```r
fit <- chisq.test(d_table)
broom::augment(fit)
```

```
# A tibble: 4 × 9
  A     B     .observed .prop .row.prop .col.prop .expected .resid .std.resid
  <fct> <fct>     <int> <dbl>     <dbl>     <dbl>     <dbl>  <dbl>      <dbl>
1 0     0            47  0.47     0.810     0.691      39.4   1.20       3.28
2 1     0            21  0.21     0.5       0.309      28.6  -1.41      -3.28
3 0     1            11  0.11     0.190     0.344      18.6  -1.75      -3.28
4 1     1            21  0.21     0.5       0.656      13.4   2.06       3.28
```

10.5 Student's t Distributions


(Unfinished)

Guinness Beer gets free advertising every time the origin story of the Student t distribution is retold, and statisticians retell the story often. The fact that the original purpose of the t distribution was to brew better beer seems too good to be true.

William Sealy Gosset (1876–1937), self-trained statistician and head brewer at Guinness Brewery in Dublin, continually experimented on small batches to improve and standardize the brewing process. With some help from statistician Karl Pearson, Gosset used then-current statistical methods to analyze his experimental results. Gosset found that Pearson’s methods required small adjustments when applied to small samples. With Pearson’s help and encouragement (and later from Ronald Fisher), Gosset published a series of innovative papers about a wide range of statistical methods, including the t distribution, which can be used to describe the distribution of sample means.

Worried about having its trade secrets divulged, Guinness did not allow its employees to publish scientific papers related to their work at Guinness. Thus, Gosset published his papers under the pseudonym "A Student." The straightforward names of most statistical concepts need no historical treatment. Few of us who regularly use the Bernoulli, Pareto, Cauchy, and Gumbel distributions could tell you anything about the people who discovered them. But the oddly named "Student's t distribution" cries out for explanation. Thus, in the long run, it was Gosset's anonymity that made him famous.

10.5.1 The t distribution's relationship to the normal distribution

Suppose we have two independent standard normal variates, $Z_0$ and $Z_1$.

A t distribution with one degree of freedom is created like so:

$$t_1 = \frac{Z_0}{\sqrt{Z_1^2}}$$

A t distribution with two degrees of freedom is created like so:

$$t_2 = \frac{Z_0}{\sqrt{\frac{Z_1^2 + Z_2^2}{2}}}$$

where $Z_0$, $Z_1$, and $Z_2$ are independent standard normal variates.

A t distribution with $\nu$ degrees of freedom is created like so:

$$t_\nu = \frac{Z_0}{\sqrt{\frac{\sum_{i=1}^{\nu} Z_i^2}{\nu}}}$$

The sum of $\nu$ squared standard normal variates has a $\chi^2$ distribution with $\nu$ degrees of freedom, which has a mean of $\nu$. Therefore, $\frac{\sum_{i=1}^{\nu} Z_i^2}{\nu}$, on average, equals one. Furthermore, its variance ($2/\nu$) approaches 0 as $\nu$ increases. When $\nu$ is high, $Z_0$ is being multiplied by a value very close to 1. Thus, $t_\nu$ is nearly normal at high levels of $\nu$.
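The construction can be simulated directly in R. This sketch assumes $\nu = 5$ and an arbitrary seed: it builds t values from standard normal variates, compares their 97.5th percentile with `qt`, checks that the variance of the scaled $\chi^2$ denominator is near $2/\nu$, and shows the t quantiles approaching the normal quantile as $\nu$ grows.

```r
set.seed(6)
nu <- 5
n_sims <- 100000

# Numerator: one standard normal variate per simulated value
z0 <- rnorm(n_sims)

# Denominator: square root of (sum of nu squared standard normals) / nu
z <- matrix(rnorm(n_sims * nu), ncol = nu)
scaled_chi_sq <- rowSums(z^2) / nu
t_values <- z0 / sqrt(scaled_chi_sq)

# Simulated vs. theoretical 97.5th percentile of the t distribution
quantile(t_values, 0.975)
qt(0.975, df = nu)

# The variance of (chi-square / nu) is 2 / nu, so it shrinks as nu grows
var(scaled_chi_sq)
2 / nu

# As nu grows, t quantiles approach the standard normal quantile
round(qt(0.975, df = c(1, 5, 30, 1000)), 3)
round(qnorm(0.975), 3)
```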

10.6 Additional Distributions

10.6.1 F Distributions

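Suppose that $F$ is the ratio of two independent $\chi^2$ variates, $X_1$ and $X_2$, each divided by its degrees of freedom, $\nu_1$ and $\nu_2$, respectively:

$$F = \frac{X_1/\nu_1}{X_2/\nu_2}$$

The random variate $F$ will have an F distribution with parameters $\nu_1$ and $\nu_2$.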
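A simulation sketch of this definition in R, assuming $\nu_1 = 3$, $\nu_2 = 20$, and an arbitrary seed. The simulated ratios are compared with the theoretical 95th percentile from `qf` and with the F distribution's mean, $\nu_2/(\nu_2 - 2)$ (defined when $\nu_2 > 2$).

```r
set.seed(7)
nu1 <- 3    # numerator degrees of freedom
nu2 <- 20   # denominator degrees of freedom
n_sims <- 100000

# Ratio of two independent chi-square variates, each divided by its degrees of freedom
f_values <- (rchisq(n_sims, df = nu1) / nu1) / (rchisq(n_sims, df = nu2) / nu2)

# Simulated vs. theoretical 95th percentile
quantile(f_values, 0.95)
qf(0.95, df1 = nu1, df2 = nu2)

# The mean of an F distribution is nu2 / (nu2 - 2) when nu2 > 2
mean(f_values)
nu2 / (nu2 - 2)
```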

The primary application of the F distribution is in analysis of variance (ANOVA), where the ratio of two variance estimates is used to test whether group means differ. I am unaware of any direct applications of the F distribution in psychological assessment.

10.6.2 Weibull Distributions

How long do we have to wait before an event occurs? With Weibull distributions, we model wait times in which the risk of the event changes depending on how long we have waited. Some machines are designed to last a long time, but defects in a part might cause them to fail quickly. If a machine is going to fail, it is likely to fail early. If the machine works flawlessly in the early period, we worry about it less. Of course, all physical objects wear out eventually, but a good design and regular maintenance might allow a machine to operate for decades. The longer a machine has been working well, the lower the risk that it will irreparably fail on any particular day.

For some things, failure on any particular day becomes increasingly likely the longer they have been used. Biological aging causes increasing risk of death over time such that the historical records have no instances of anyone living beyond

For some events, there is a constant probability that the event will occur. For others, the probability is higher at first but becomes steadily lower over time. For still others, the longer we wait, the greater the probability that the event will occur. For example, as animals age, the probability of death accelerates such that beyond a certain age no individual has been observed to survive.
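These three patterns correspond to the Weibull distribution's shape parameter. The hazard (the instantaneous risk of the event, given that it has not happened yet) is the density divided by the probability of surviving to time $t$. In R's parameterization (`dweibull(x, shape, scale)`), a shape below 1 gives a falling hazard, a shape of exactly 1 gives a constant hazard (the exponential distribution), and a shape above 1 gives a rising hazard. The shapes 0.5, 1, and 2 and the scale of 1 are arbitrary illustrative choices, and `weibull_hazard` is a helper defined here.

```r
# Hazard function: density / survival probability
weibull_hazard <- function(t, shape, scale = 1) {
  dweibull(t, shape = shape, scale = scale) /
    pweibull(t, shape = shape, scale = scale, lower.tail = FALSE)
}

time_points <- c(0.5, 1, 2, 4)

hazards <- rbind(
  early_failures = weibull_hazard(time_points, shape = 0.5),  # risk falls over time
  constant_risk  = weibull_hazard(time_points, shape = 1),    # risk stays the same
  wear_out       = weibull_hazard(time_points, shape = 2)     # risk rises over time
)
colnames(hazards) <- paste0("t=", time_points)
round(hazards, 3)
```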

10.6.3 Unfinished

- Gumbel Distributions

- Beta Distributions

- Exponential Distributions

- Pareto Distributions